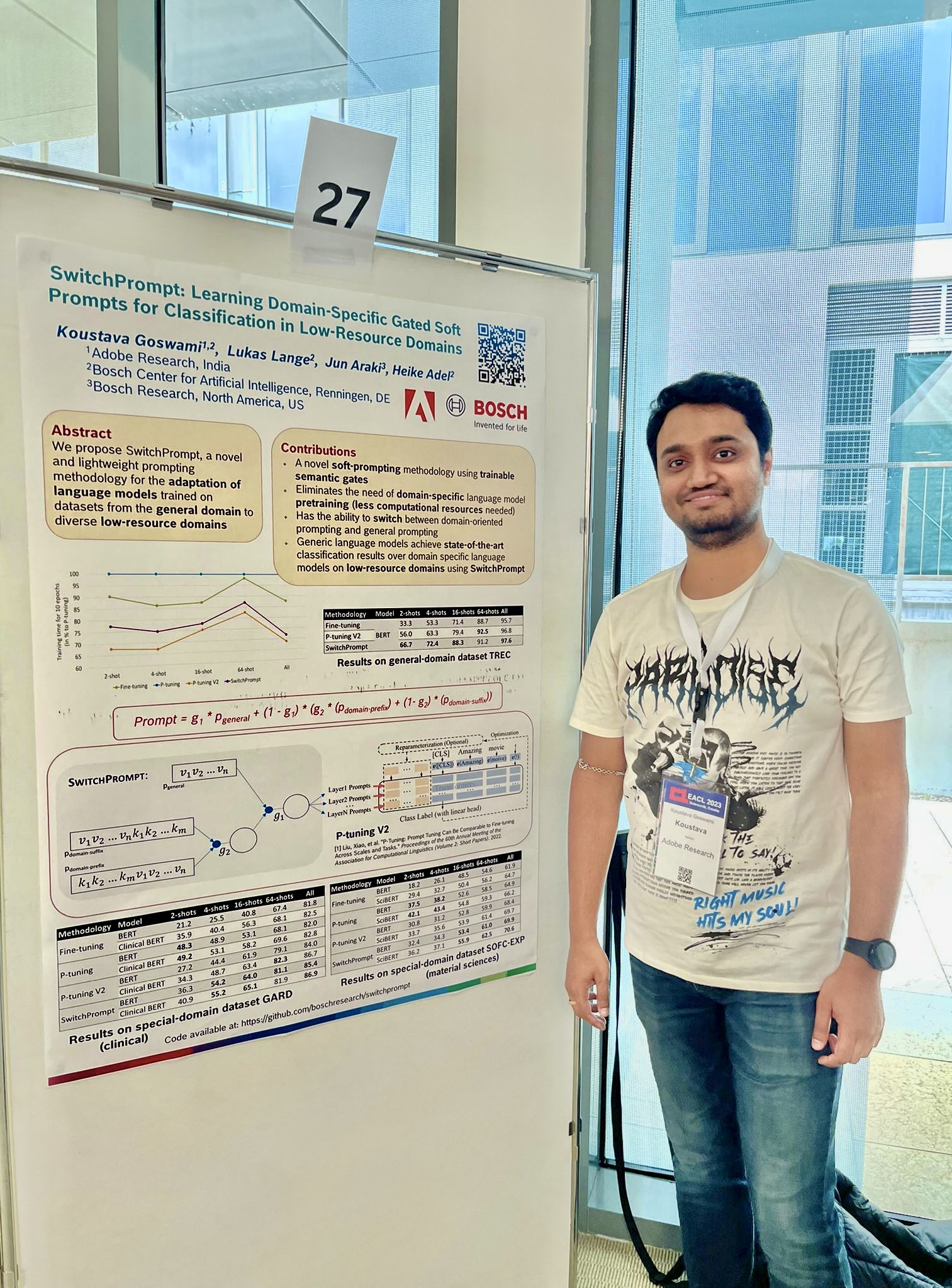

Koustava Goswami

Research Scientist 2 at Adobe Research

🏆 His work on Acrobat AI Assistant was covered by multiple newspapers and channels when it was named one of TIME's Best Inventions of 2024.

He is currently developing GenAI features for Adobe Acrobat Studio. He is the core researcher behind Acrobat AI Assistant, working on cutting-edge Natural Language Processing and Artificial Intelligence technologies.